Ralph Wiggum, Abundance and Software Engineering

I’ve been enjoying my break from work and using this time to write code almost entirely with LLMs, learn how others use them and explore what my own thoughts are. The Ralph Wiggum phenomenon by Geoff Huntley, or agent loops more generally, inspired me to write this post. There’s a bit of self-discovery involved as I use these tools more and more, and that’s something I wanted to write about since I feel many might be in the same boat. I hope this post makes something click for them.

Using LLMs before November 2025

At my previous job, I used LLMs for writing code with Cursor, Claude and an internal tool we had that could use a bunch of models underneath. In addition to these, I’ve been using LLMs for refining my thoughts, understanding things I don’t know, summarizing, rewording/editing something and at times even questioning and criticizing my stance on something. If anything, LLMs have definitely been a productivity boost as they have helped with discovery when it comes to tech. After all, when my homelab is throwing a weird error because the Oculink connection to the GPU isn’t set up properly, an LLM is the tool I go to first these days, since it can often help me resolve the problem sooner than going through several forums.

Cautiously Optimistic

Before we begin, a few things about me and my stance on AI, or rather the current iteration of it: LLMs. I’ve not been a skeptic, I’ve been cautiously optimistic. One of my first encounters with AI was GitHub Copilot, which I subscribed to when it first launched and used quite diligently for the first few weeks. It was a neat experience for sure - I already relied heavily on snippets and code completion in my editor and Copilot was genuinely nice since I had to type less. I was working in Golang at that time but I wish I had been working in Java to have appreciated it even more. Over time, I graduated to primarily using ChatGPT and Claude for brainstorming and writing snippets of code.

My tryst with LLMs for writing code stayed pretty much the same up until November 2025. I’d fire up Claude (or similar) on the web, ask it to implement a function or two with a prompt, refine the output, ask it to explain anything I didn’t understand, take that output back to my editor and integrate it with the rest of the application. Occasionally, I used Claude Code at work, mostly to fix annoying tests in React/TypeScript that I didn’t want to spend time on. These largely worked, although there were a few instances where Claude pretty much chose to comment out the test and claimed it had fixed the issue. I remember chuckling and then rolling up my sleeves to dig in manually, but overall I was very impressed that here was a tool getting things done that I had no intention of working on. So, essentially, I was still the driver of the car: I used LLMs largely to piece things together, as a faster typewriter, and occasionally for discovery and for implementing things that involved a little more time and research.

Concerns

And, trust me - I’ve had my concerns about AI and LLMs too. As impressive as these are, they’re still next-token predictors, and they can predict the next token only because they’ve been trained on an inordinate amount of data - code, books, Reddit, blogs, Wikipedia etc. At this point, a trained and aligned LLM is definitely one of the best compressed capsules of humanity that we could possibly send to space in a future golden record. However, a lot of this data was obtained dubiously, although we had a verdict in 2025 that Anthropic’s training of LLMs using books is legal and fair use. I’m sure we’ll see more and more such litigation going to court in 2026 and beyond, and this definitely sets a precedent as you’ll see more people essentially (attempting to) recreate companies over a weekend, as Geoff Huntley points out in this video.

Building companies over the weekend on a beach

We still don’t know the true cost of using these tools. We can predict the cost of inference and training looking at the information shared by the leading model providers, but this is only a very small part of the picture. Without data, LLMs can’t work and while some data has a sticker price to it, we don’t know what the true value of a large part of the data is.

And don’t get me started on privacy and safety in general. Even experienced folks like Steve Yegge squirm when you point LLMs at your production systems and ask them to do something. Note that production is subjective here. If you’re asking Claude Cowork to go organize the PDFs in your Downloads directory, be warned that there’s a non-zero probability that it deletes files. This is a classic case of alignment mismatch in LLMs that folks are beginning to consider a feature and not a bug. After all, a small misalignment in one domain can cause erratic behavior in unrelated domains. So, an LLM that is expected to be whimsical when mimicking a stand-up comic can potentially behave incorrectly when organizing your files.

In spite of these concerns, there’s no denying that these tools are magical to someone who knows how to wield them. While I’ve always tinkered with one thing or the other as an engineer, using an LLM has definitely given a lot more polish to my tools where I rely on them to embellish and make things better when I lose interest.

Abundance Mindset

Before the world was taken over by LLMs, there was web3 - NFTs, blockchains and the like. I was first introduced to Bitcoin in 2013 when I was a regular visitor to Hacker Dojo in Mountain View, when it was still on Fairchild Dr. This was a magical place where I could just walk in, pay $20 and then use the facility for a few hours. At the reception was this small machine where one could exchange dollars for bitcoin. This was the time when I had just begun to work and was still paying off loans for my education. Sure, I could’ve spared a few bucks to buy a bitcoin or two (it was < $100 during this time), but the conservative in me said an emphatic no to it. I also remember reading the Bitcoin paper during this time and thinking to myself - this is ingenious but … incredibly wasteful too. I’m conservative and risk-averse by default and even to this day, I switch off lights and fans when they’re not in use, I choose to run my washer/dryer during off-peak hours and I shudder when my kid doesn’t turn off the bathroom vanity lights after brushing. Of course, being in California, my fears are not unfounded, thanks to PG&E. My theory is that one has to have an abundance mindset to even conceive of something like Bitcoin in the first place.

Coming back to LLMs: from an energy usage standpoint, training and running these models is no different from Bitcoin’s proof-of-work model. We have had a ton of investment pouring into data centers, and energy subsidies for companies that train and serve these models. And the Ralph Wiggum phenomenon takes writing code to the next level. If you’re on a Claude Code Pro/Max subscription, you can actually get quite a lot done, and the claim is that these plans are definitely underpriced today. Many CEOs are imploring their employees to start using these tools even though they put a huge dent in opex; Sentry’s CEO, David Cramer, for instance, sent a memo to his employees earlier this month. He acknowledges that the engineering budget has gone up but says it’s worth it and that they’ll deal with it in the future.

This phenomenon picked up steam over the holiday break and was made popular by Anthropic’s new plugin, Ralph Loop, but it was originally conceived by Geoff Huntley a few months earlier. It’s also fascinating that folks like Steve Yegge have converged on this way of using LLMs to write code for you. Essentially, it works by writing a spec file - in fact, you don’t write it yourself - you ask the LLM to generate it for you. Then you have the LLM write code, write tests and run them. You run this in a loop with a max iteration bound and a success criterion - something like 80% of tests should pass. The most important thing is to start a new prompt every single time to avoid context rot and getting bitten by the lost-in-the-middle problem. The expectation is that every single iteration brings you closer to your target.
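To make the shape of such a loop concrete, here is a minimal sketch in Python. It is not Geoff Huntley’s script or Anthropic’s plugin; the names `agent_loop`, `run_agent` and `run_tests` are hypothetical, and the two callables stand in for "invoke the LLM with a fresh prompt" and "run the test suite and report results".

```python
from typing import Callable, Tuple


def agent_loop(
    run_agent: Callable[[str], None],          # hypothetical: starts a NEW agent session with this prompt
    run_tests: Callable[[], Tuple[int, int]],  # hypothetical: returns (tests_passed, tests_total)
    spec: str,
    max_iterations: int = 10,
    pass_threshold: float = 0.8,
) -> Tuple[bool, int]:
    """Re-run the agent on the same spec until enough tests pass.

    Returns (succeeded, iterations_used).
    """
    for iteration in range(1, max_iterations + 1):
        # Only the spec is handed over each time, never the previous
        # conversation, so context does not pile up across iterations.
        run_agent(spec)
        passed, total = run_tests()
        if total > 0 and passed / total >= pass_threshold:
            return True, iteration
    return False, max_iterations


if __name__ == "__main__":
    # Stand-in agent that "fixes" three more tests on every call.
    state = {"passing": 0}

    def fake_agent(prompt: str) -> None:
        state["passing"] = min(10, state["passing"] + 3)

    def fake_tests() -> Tuple[int, int]:
        return state["passing"], 10

    print(agent_loop(fake_agent, fake_tests, "contents of the spec file"))
```

In a real setup, progress lives in the repository and the spec file rather than in the conversation, which is what lets every iteration start from a clean prompt.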

Future of Software Engineering

When you first use agentic loops (I’ll refer to ralph-wiggum as agentic loops from here on since that’s a more generic name) for writing code as a conservative person, you’ll definitely think you’re using a jackhammer to assemble a piece of Ikea furniture. Yes, it is indeed crude and over time, we’ll have more refined tools, likely even new programming languages that aim to reduce the number of tokens - akin to JSON -> protobuf for reducing the number of bits on the wire. But until then, we’re stuck with these tools and they work great, with a few rough edges. If you feel icky using them, mind you that there are people who don’t. And many of us are often frustrated using these tools. It’s the norm today.

When I started writing toy applications using agentic loops, I hadn’t thought of the economics behind it, but I was surely taken aback when Geoff mentioned that he ran a loop for 24 hours using Claude’s API access and the math worked out to be around $10.42 per hour. This is cheaper than California’s minimum hourly wage, which is $16.90. Again, these are today’s numbers and we don’t know what the numbers are going to be in the future (surge pricing anyone?). And we already know that as the edge of inference expands, the number of tokens these models need and also emit is going up for the same task. But here’s the kicker - even if the underlying models don’t improve manifold from here and we’re stuck with Claude Sonnet/Opus 4.5, they’re still surprisingly good for a lot of tasks and you can eliminate a lot of grunt work by having the LLM do it for you.
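The arithmetic behind that comparison is easy to check. A small sketch, using only the two hourly figures quoted above; the 24-hour window is the length of Geoff’s run, and the constant names are mine.

```python
LOOP_COST_PER_HOUR = 10.42   # USD, the agent-loop figure quoted above
CA_WAGE_PER_HOUR = 16.90     # USD, the California wage figure quoted above
HOURS = 24                   # length of the run


def total_cost(rate_per_hour: float, hours: int) -> float:
    """Total cost in USD, rounded to cents."""
    return round(rate_per_hour * hours, 2)


loop_total = total_cost(LOOP_COST_PER_HOUR, HOURS)
wage_total = total_cost(CA_WAGE_PER_HOUR, HOURS)
ratio = LOOP_COST_PER_HOUR / CA_WAGE_PER_HOUR

print(f"agent loop for {HOURS}h: ${loop_total:.2f}")   # $250.08
print(f"wage for {HOURS}h:       ${wage_total:.2f}")   # $405.60
print(f"loop cost as share of wage: {ratio:.0%}")      # 62%
```

So at these numbers the loop costs a little under two thirds of the wage figure per hour, before accounting for the human time spent writing specs and reviewing output.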

Not too long ago, I was at a lunch-and-learn session at work where someone was going over their experiences with using an LLM for writing code, and a shrewd manager-of-managers asked them - can I run an army of these to work on all my teams’ Jira boards and have stuff done? I don’t think their intention was to fire everyone in their org but to trim the fat - they’d rather retain people who get 10x the work done in the same time. So, the writing is definitely on the wall - learn to use these tools if you want to work in corporate. I liken this to industrialized furniture makers vs exotic/one-of-a-kind furniture makers. Sure, a handful of really popular furniture makers who bring a lot of craft into what they do and build unique pieces will stand out and succeed, but they will not be affordable to the average person, who’d just go to Wayfair or Ikea. This is not a perfect analogy for writing software though - software is mostly write-once, sell multiple copies. And the innards of a piece of software are more complex than a piece of furniture. You might choose to slap a slicker UI (partly LLM-written) on a mostly LLM-written backend and make a killing. But the kicker is - anyone can do this. The only thing between you and solving a problem is agency and the will to do it!

So, what is the moat, you ask? Don’t get me wrong here, nobody can predict the future. So take whatever I say with a grain of salt. My take is that for large SaaS companies the moat is likely compliance/privacy, but these are relatively short-lived too. Mega companies would definitely need lobbying to prevent incumbents from being dethroned - it’s definitely going to be ugly. But there’s a more genuine way of doing things - companies that stand out are the ones that delight their users. I love this word in Tamil - விருந்தோம்பல் / virunthombal, which translates to hospitality. A company or entity that truly cares about its users and offers a good e2e experience is likely to go far. Of course, you’ll still have people preferring Wayfair, but at some point inertia is likely to kick in for large businesses.

Dax Raad is another wonderful engineer who I look up to and learn from. He precisely lays it down in this talk. Think of selling software as a funnel - things like marketing, getting someone to their aha moment, retaining them are harder problems now. Yes, there are AI tools to assist you here but these are largely still human decisions - we’ll eventually get to a point when agents will do all of these and I truly don’t know what the end-game there is. People think we’ll all be sitting on beaches and sipping margaritas while agents do our bidding and earn a living for us but I don’t think a large % of humans are capable of that. 😂

What should you do as an engineer?

I love working out and in recent times, I’ve been incorporating a lot of kettlebells in my workout. There’s a popular saying that you should never come in the way of a kettlebell and instead, move yourself around it. I see the industry’s move towards using LLMs for writing code as inevitable, like a kettlebell swinging in front of you. The world will increasingly move towards this in 2026 and beyond and we’ll get to a point where we’ll rely on these more and more. Sure, we could live with just candles and fireplaces if there were no electricity - yes, it would be a discomfort for most of us, but we wouldn’t die. The same thing will come for writing code - you can type every single letter of your program or you can orchestrate an army of agents to do your bidding by clearly stating what you intend to achieve.

And in the marketplace of selling your skills to get employed, the one who gets things done effectively and efficiently will always win.

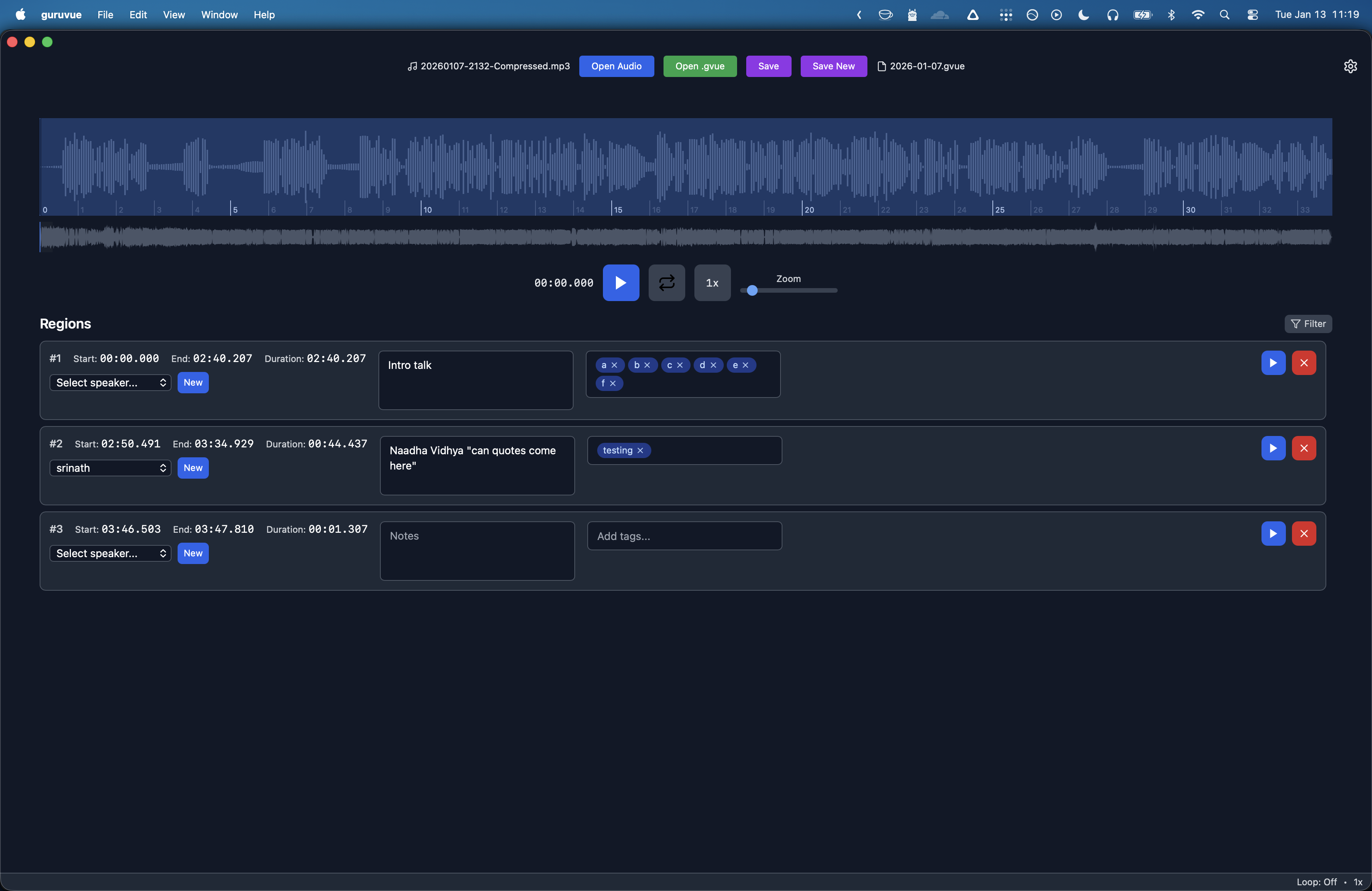

guruvue, a tool to annotate, classify and analyze music lesson recordings that I've been building pretty much entirely through Claude

antirez, the author of Redis, is another amazing engineer who I look up to, and he puts it beautifully in this post.

I would not respect myself and my intelligence if my idea of software and society would impair my vision: facts are facts, and AI is going to change programming forever. … it is now clear that for most projects, writing the code yourself is no longer sensible, if not to have fun.

I 100% agree. If you’re writing software for a company, you better learn to use these tools and think how you can become productive. If you think - I’m a systems engineer and I’m safe - well, antirez has something for you as well.

the more isolated, and the more textually representable, the better: system programming is particularly apt

No wonder there are already vibe-coded operating systems on GitHub. Sure, these are still toy ones and are not going to replace Linux on servers anytime soon, but it’s still astonishing to see where one can land in just a few hours with these tools.

And yes, some of you might still be feeling squeamish about using these tools, but the writing is on the wall, unfortunately.

However, it’s not all rosy for sure. Antirez has this caution for us.

Test these new tools, with care, with weeks of work, not in a five minutes test where you can just reinforce your own beliefs. Find a way to multiply yourself, and if it does not work for you, try again every few months.

Never, never, never point a tool to your only copy of the database or production. It’s still a chimp with a lot of high precision tools. With careful handholding and guidance, it can extract a tooth. But without careful supervision, it can definitely go for your jugular.

And some of us might be feeling dejected. Antirez is there to assuage us.

Yes, maybe you think that you worked so hard to learn coding, and now machines are doing it for you. But what was the fire inside you, when you coded till night to see your project working? It was building.

Writing software has always been a means to an end - the most important thing is whether it solves a problem. This is what a piece of software should do when it’s built within the confines of a company. If you’re someone who takes an afternoon on a weekend to learn about shaders and implement something that is not used anywhere, you should absolutely still do it and show the world what you did with it. But if you’re building something for an employer, there’s no reason why you shouldn’t use these tools to make progress and add business value for your employer!

And I’d even argue that if you’re building something for personal growth, you should still use these tools to eliminate grunt work and for learning. LLMs are great for learning new things. What’s not changing is the need for good judgement and an understanding of CS fundamentals. Over the past month, I’ve learnt a thing or two about React purely by working with my LLM and asking it to explain whatever I don’t understand. I’ve also made it a point not to blindly accept what the LLM says and have made sure to verify against the docs from time to time. This has helped me learn new things faster and I’m sure I’ll keep doing this going forward. If you write software for a living, make sure to dedicate a few hours every month to just exploring a new realm of CS that you hadn’t ventured into before, and use LLMs for it.

Needless to say, there’s definitely no alternative to knowing the basics and understanding things from first principles. But typing code letter by letter is not it for me anymore. I’d rather use the time to do some higher-order thinking.